How LLMs and Semantic AI Will Transform Your Enterprise Data Game

This is How Large Language Models Can Help You Manage and Analyze Enterprise Data in 2024 and Beyond

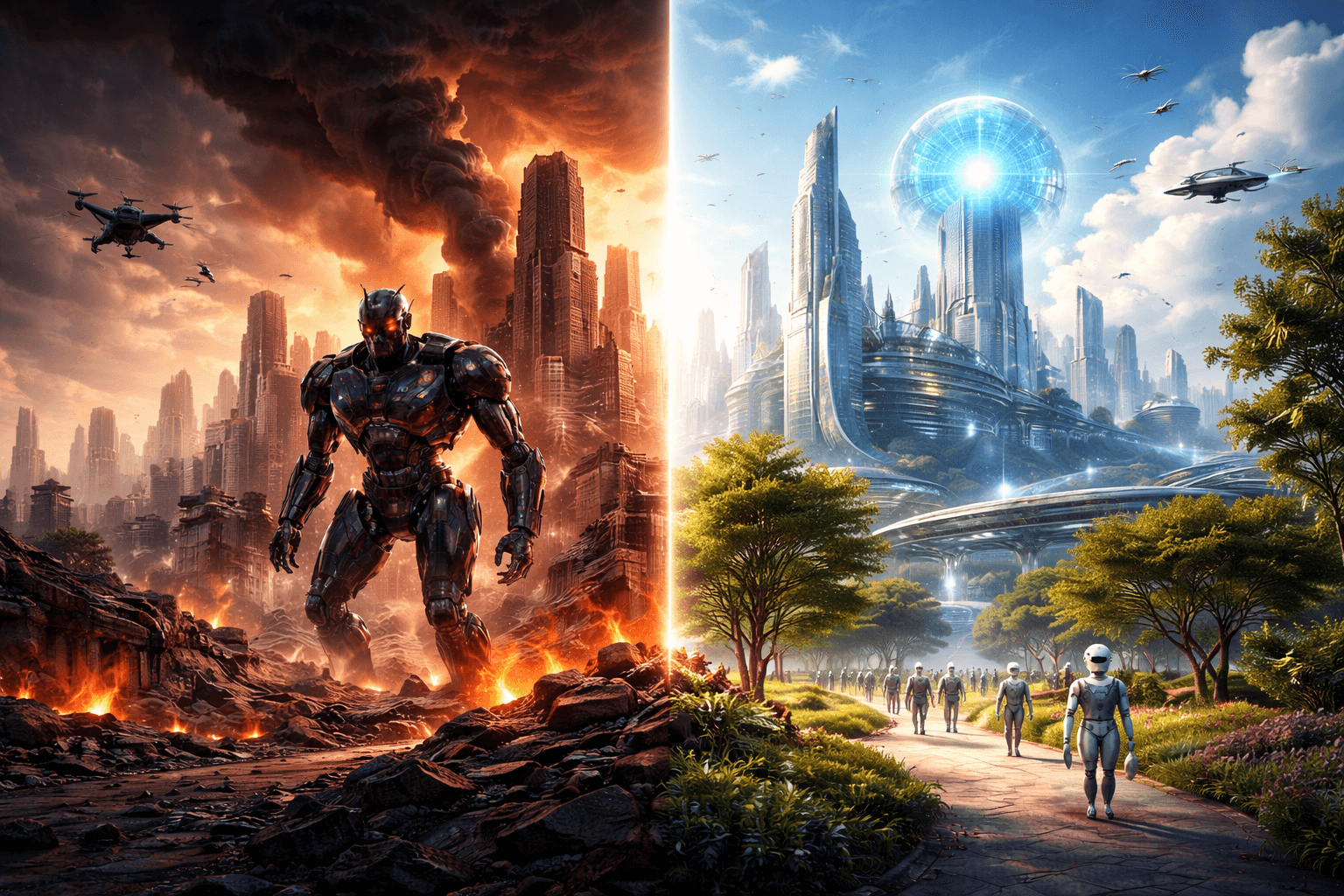

In the dynamic world of organizational data, we are witnessing the dawn of a revolutionary era, marked by the integration of large language models (LLMs) in data management and analytics workflows. This transformative journey, spanning the next decade, promises to redefine our interaction with business data, blending sophistication with user-centric experience.

Picture an environment where a business question posed within your application is seamlessly translated into a data query, executed on the right data, and answered within the same interface as visual storytelling. This advanced interaction heralds a new era of data accessibility and usability.

Will it happen tomorrow? Probably not for most organizations, for two major reasons. First, business data stored in databases and warehouses mostly lacks semantics. Second, we need mechanisms to understand the questions asked in the correct organizational business context and to govern their execution.

Below are my predictions about what could happen in the near and far future and how our data practice will benefit from those developments.

Transforming Data Consumer Interactions with LLMs in the Short to Medium Term

The immediate future, spanning the next 3–5 years, is set to witness a significant leap in how we interact with and interpret data. However, this transformative journey isn’t without its challenges.

Let’s assume that the currently very limited capabilities of LLMs in mathematical analysis will improve rapidly over time. Even so, the famous saying “torture your data long enough, and it will confess to anything” still applies: at least for the mid-term, human oversight will be crucial in curating and interpreting data, ensuring that the insights derived are accurate and actionable.

What could help? A discipline called Semantic AI can come in handy.

Semantic AI is a combination of large language models and relational models (graphs), activated through organizational metadata and a context-specific training corpus. It will be able to do the following:

- Prepare your organization for AI/generative AI implementation by making your data landscape semantically meaningful and definition-aligned;

- Understand the question in your organization’s business context;

- Map the question to the correct internal or external data;

- Run the query and narrate the result via decision intelligence supporting storytelling;

- Assist the users in determining which relevant questions to ask further in a specific context.
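The question-to-answer flow in the list above can be illustrated with a deliberately small sketch. Everything in it is an assumption made for illustration: the `GLOSSARY` semantic layer, the `sales` table, and the function names are hypothetical, and a plain keyword lookup stands in for the LLM and graph components that a real Semantic AI stack would use.

```python
import sqlite3

# Hypothetical semantic layer: business terms mapped to a table and a SQL expression.
GLOSSARY = {
    "revenue": ("sales", "SUM(amount)"),
    "order count": ("sales", "COUNT(*)"),
}


def map_question(question):
    """Return (term, table, expression) for the first glossary term found in the question.

    A keyword match stands in for LLM-based understanding of business context.
    """
    lowered = question.lower()
    for term, (table, expression) in GLOSSARY.items():
        if term in lowered:
            return term, table, expression
    raise LookupError(f"No glossary term matches: {question!r}")


def answer(conn, question):
    """Map the question to data, run the query, and narrate the result as one sentence."""
    term, table, expression = map_question(question)
    # Table and expression come only from the curated glossary, never from user input.
    sql = f"SELECT region, {expression} FROM {table} GROUP BY region ORDER BY region"
    rows = conn.execute(sql).fetchall()
    parts = ", ".join(f"{region}: {value}" for region, value in rows)
    return f"{term.capitalize()} by region -> {parts}"


def demo_connection():
    """Build an in-memory database with a few illustrative rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("EMEA", 120), ("APAC", 80), ("EMEA", 30)],
    )
    return conn


if __name__ == "__main__":
    print(answer(demo_connection(), "What is our revenue this quarter?"))
```

The point of the sketch is the shape of the pipeline rather than the matching logic: because the query is assembled only from governed glossary entries, the semantic layer doubles as a control on which data a question is allowed to reach.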

It might sound like a science fiction story, but the tech is catching up rapidly, and I guarantee you will see scenarios like this sprouting as early as 2024 and becoming enterprise-grade in 2025.

A Vision for the Future Around LLMs: Beyond 5 Years

Looking beyond five years, the landscape is set to evolve even further. We envision a transition to an intuitive, application-agnostic approach where users simply state their questions or tasks, and the relevant applications or workflows respond proactively or are even created on the fly. This visionary state will rely heavily on seamless inter-application business communication. That is more than a technical integration problem, and it is a challenge that LLMs could address, overcoming current integration barriers.

Empowering Data Producers for a Productive Future

Simultaneously, the impact of LLMs on data producers is set to be transformative. In the near to medium term, substantial productivity gains are anticipated through automation in routine tasks such as script writing, documentation, tests, step-by-step guides, and pipeline creation for data engineers, analysts, and DataOps. This evolution will also encourage efficiency through deduplication and harmonized practices across departments.

As we project further into the future, the infrastructure of data itself will undergo a sea change, moving from rigid, predefined schemas to more dynamic, automated, and flexible implementations, mirroring the evolution seen in business intelligence over recent years. We will not see duplications or monstrous integration projects but will see smart result caching, workflow automation, automatic implementation readjustment and reuse.

Leading the Charge: LLM Use Cases Driving the Future of Data Management

Central to this transformation are key use cases where LLMs will play a pivotal role:

- Automated documentation: streamlining the creation of descriptions for data processes.

- Enhanced auto-tagging: improving the accuracy of data classification, a critical factor in managing sensitive information.

- Semantic discovery: enabling users to search for data elements effortlessly, enhancing accessibility and utilization.
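Two of the use cases above, semantic discovery and auto-tagging, can be sketched in a few lines. This is a toy under stated assumptions: the `CATALOG` entries and `SENSITIVE_PATTERNS` rules are hypothetical, token overlap (Jaccard similarity) stands in for the embedding-based matching an LLM would provide, and regular expressions stand in for a learned classifier.

```python
import re

# Hypothetical data catalog: column name -> human-written description.
CATALOG = {
    "crm.customers.email_addr": "customer email address used for contact",
    "fin.invoices.total_amt": "total invoice amount billed to the customer",
    "hr.staff.birth_dt": "employee date of birth",
}

# Hypothetical tagging rules: tag -> pattern that signals sensitive content.
SENSITIVE_PATTERNS = {
    "PII": re.compile(r"\b(email|birth|phone|address)\b"),
}


def tokens(text):
    """Split text into a set of lowercase alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def discover(query, top_k=2):
    """Rank catalog columns by Jaccard overlap between the query and each description."""
    query_tokens = tokens(query)
    scored = []
    for name, description in CATALOG.items():
        description_tokens = tokens(description)
        union = query_tokens | description_tokens
        score = len(query_tokens & description_tokens) / len(union) if union else 0.0
        if score > 0:
            scored.append((score, name))
    # Highest score first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_k]]


def auto_tag(name):
    """Return the sorted list of tags whose pattern matches the column description."""
    description = CATALOG[name]
    return sorted(tag for tag, pattern in SENSITIVE_PATTERNS.items() if pattern.search(description))


if __name__ == "__main__":
    print(discover("invoice amount"))
    print(auto_tag("hr.staff.birth_dt"))
```

Both functions depend on the descriptions being present and meaningful, which is why automated documentation sits first in the list: discovery and tagging are only as good as the metadata they read.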

The Strategic Imperative: Extracting Value from LLMs

As we embrace these technological advancements, we must focus on extracting tangible value. Integrating LLMs in data management and analytics is a strategic evolution, not just a technological upgrade. It’s about revolutionizing business interactions with data, making insightful, data-driven decision-making more intuitive and accessible to all. This paradigm shift is about enhancing capabilities and fundamentally transforming the business intelligence and analytics landscape.

In conclusion, the extension of LLMs into enterprise data practice represents a strategic inflection point, heralding a new era in business decision-supporting intelligence. It’s a journey from technology adoption to strategic transformation, redefining how businesses leverage data for decision-making and innovation.

Want to understand how to deploy Semantic AI to bring the most out of your data practice?

Drop us a line